
My name is Zewen Xu. I have been a Ph.D. student at the Institute of Automation, Chinese Academy of Sciences (CASIA), since September 2020, supervised by Professor Yihong Wu and Assistant Researcher Hao Wei. My previous research focused on visual-inertial odometry (VIO), pose estimation, and simultaneous localization and mapping (SLAM). I am now expanding my interests to feed-forward 3D reconstruction and scene understanding, particularly scene graph construction and vision-language navigation. I am open to collaboration; please contact me if you are interested in these topics.


- [2025.12] 🎉 One paper, DRT-VI-OSTC, has been accepted by IEEE TRO.

- [2025.05] 🎉 One paper, DOGE, has been accepted by ICRA 2025.

- [2023.10] 🎉 One paper, PLPL-VIO, has been accepted by IROS 2023.


\* denotes equal contribution

Journal Paper


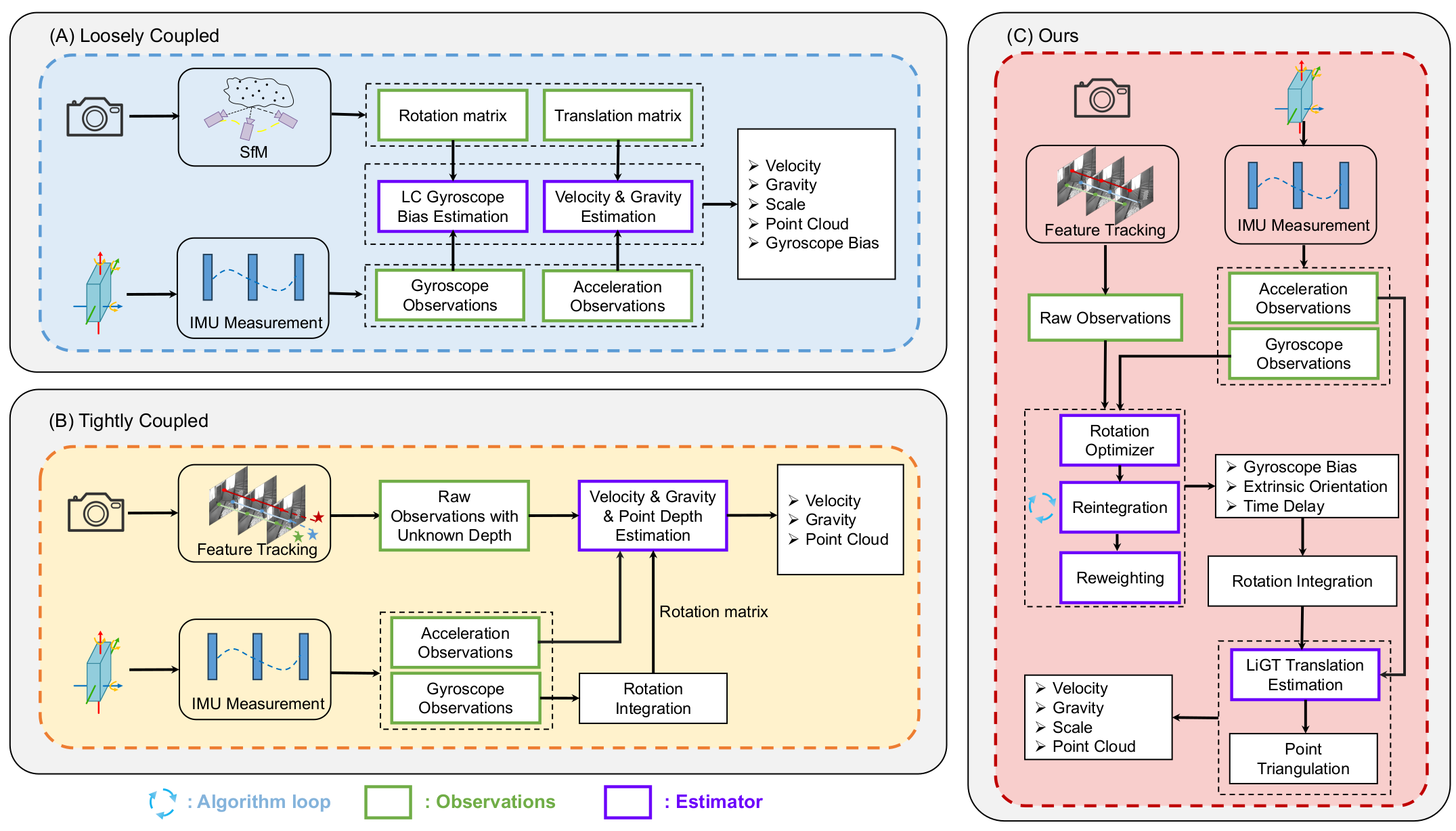

Bo Xu\*, Zewen Xu\*, Yijia He, Zhangpeng Ouyang, Hao Wei, Yihong Wu, Jiangcheng Li, Hongdong Li

IEEE Transactions on Robotics (TRO)

Paper / Code (coming soon) / Website

We propose a novel initialization and online spatial-temporal calibration method for visual-inertial odometry (VIO) that decouples rotation and translation estimation to achieve higher accuracy and robustness. Existing initialization methods suffer from limited accuracy or robustness (e.g., in scenarios with little translational motion) and rarely perform spatial-temporal calibration during initialization, despite its considerable practical value. Our method leverages rotation-translation decoupling constraints to estimate the gyroscope bias, the extrinsic rotation, and the camera-IMU time offset simultaneously, even under pure rotational motion. Moreover, we are the first to analyze the observability of the rotational constraints in rotation-translation decoupling methods, experimentally identifying the unobservable directions of the state space under three degenerate motions within our approach. We also conduct extensive experiments to delineate the practical boundaries within which these parameters can be solved; together, these two analyses substantially improve the practical applicability of decoupling-based methods. Experiments on simulated and real-world datasets demonstrate that our method outperforms state-of-the-art approaches in accuracy and robustness while remaining computationally efficient. Further experiments verify that it significantly improves the convergence of VIO systems. The source code will be made available to the community.
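To give a feel for what estimating a camera-IMU time offset involves, here is a minimal sketch of one common, much simpler technique: cross-correlating the angular-speed magnitude measured by the gyroscope with the angular speed derived from camera rotations. This is not the method of the paper above. It assumes synthetic, noise-free signals, a fixed sample rate, and an offset that is an integer number of samples; the functions `angular_speed`, `pearson`, and `estimate_lag` are hypothetical names chosen for illustration. Comparing magnitudes works without knowing the extrinsic rotation, because rotating an angular-velocity vector does not change its norm.

```python
import math

# Hypothetical synthetic angular-speed profile (rad/s), sampled at a fixed rate.
def angular_speed(k):
    return 1.0 + 0.8 * math.sin(0.11 * k) + 0.3 * math.sin(0.37 * k + 1.0)

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def estimate_lag(gyro_rate, cam_rate, max_lag):
    """Integer lag (in samples) by which cam_rate trails gyro_rate, chosen by
    maximizing the correlation of the overlapping parts of the two signals."""
    best_lag, best_corr = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            g, c = gyro_rate[:len(gyro_rate) - lag], cam_rate[lag:]
        else:
            g, c = gyro_rate[-lag:], cam_rate[:len(cam_rate) + lag]
        corr = pearson(g, c)
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return best_lag

N, TRUE_LAG = 300, 7
gyro_rate = [angular_speed(k) for k in range(N)]
# Hypothetical camera-derived angular speed, delayed by TRUE_LAG samples.
cam_rate = [angular_speed(k - TRUE_LAG) for k in range(N)]

estimated_lag = estimate_lag(gyro_rate, cam_rate, max_lag=20)
print("estimated lag (samples):", estimated_lag)
```

With a sample period Δt, the offset would be `estimated_lag * Δt`. Real calibrators additionally need sub-sample precision and must cope with noise and with rotations estimated from images, which this sketch ignores.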

Conference Paper


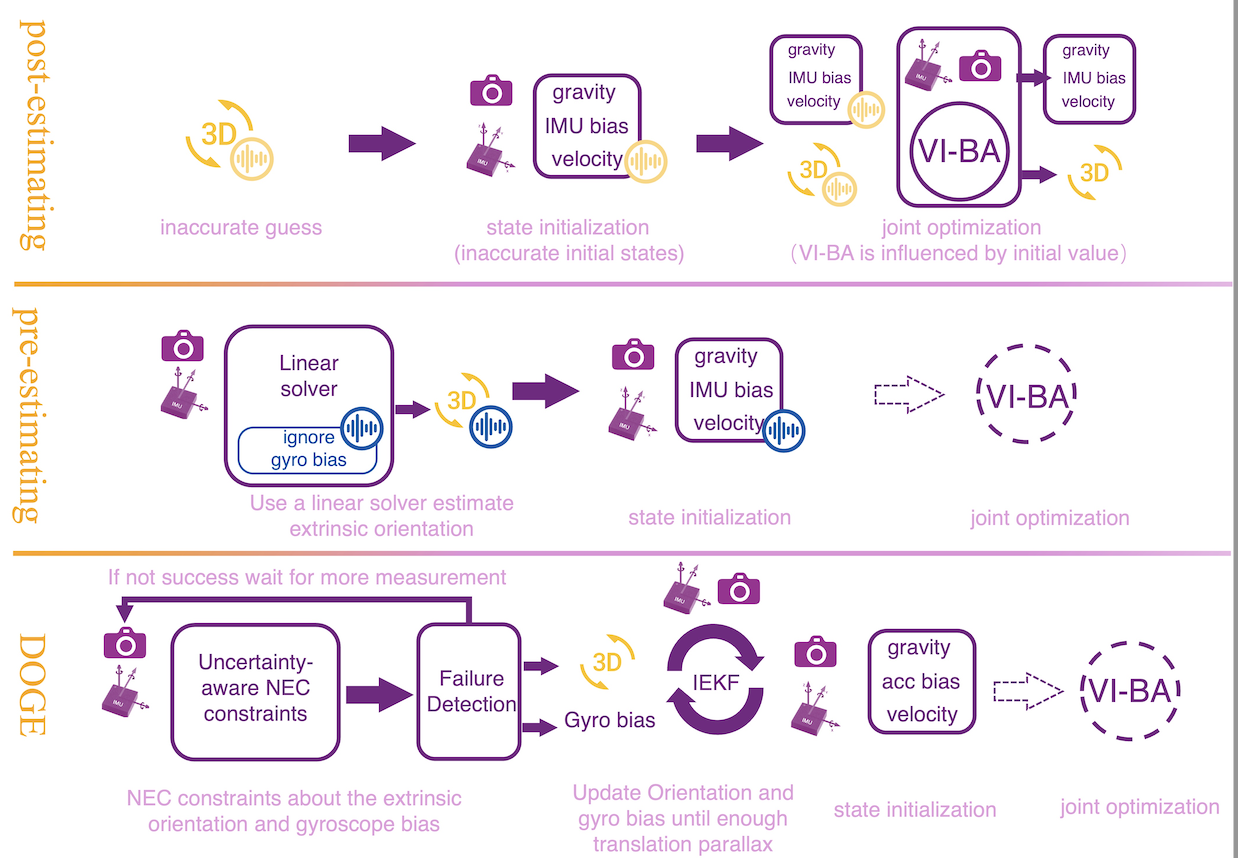

Zewen Xu, Yijia He, Hao Wei, Yihong Wu

ICRA 2025

Paper

Most existing visual-inertial odometry (VIO) initialization methods rely on accurately pre-calibrated extrinsic parameters. However, during long-term use, irreversible structural deformation caused by temperature changes, mechanical compression, and similar factors alters the extrinsic parameters, especially their rotational part. Existing initialization methods that estimate the extrinsic parameters simultaneously suffer from poor robustness, low precision, and long initialization latency, because they require sufficient translational motion. To address these problems, we propose a novel VIO initialization method that jointly models the extrinsic orientation and gyroscope bias within normal epipolar constraints, achieving higher precision and better robustness without delaying rotational calibration. First, we design a rotation-only constraint for estimating the extrinsic orientation and gyroscope bias; it tightly couples gyroscope measurements with visual observations and can be solved even under pure rotation. Second, we propose a weighting strategy together with a failure-detection strategy to improve the precision and robustness of the estimator. Finally, we use maximum a posteriori (MAP) estimation to refine the results before sufficient translational parallax is available. Extensive experiments demonstrate that our method outperforms state-of-the-art methods in both accuracy and robustness while maintaining competitive efficiency.
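The normal epipolar constraint mentioned above can be illustrated with a small sketch. It is not the DOGE implementation: it uses plain Python, hypothetical synthetic points, and the generic coplanarity form of the constraint. For each correspondence with bearing vectors f and f′, the epipolar-plane normal n = f × R f′ is orthogonal to the translation, so the normals of any three correspondences are coplanar when R is correct. The check involves only the rotation R, never the translation t, which is the property that lets rotation be estimated separately from translation. The helper names, the rotation angles, and the translation value are assumptions made for illustration.

```python
import math

# Minimal 3-vector helpers (pure Python, no NumPy assumed).
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    n = math.sqrt(dot(v, v))
    return tuple(x / n for x in v)

def matvec(R, v):
    return tuple(dot(row, v) for row in R)

def transpose(R):
    return tuple(zip(*R))

def rot_z(theta):
    c, s = math.cos(theta), math.sin(theta)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))

def epipolar_normals(R, bearings1, bearings2):
    # n_i = f_i x (R f'_i): normal of the epipolar plane of correspondence i.
    return [cross(f1, matvec(R, f2)) for f1, f2 in zip(bearings1, bearings2)]

def coplanarity_residual(normals):
    # Scalar triple product of three normals; zero when they are coplanar.
    n1, n2, n3 = normals[:3]
    return dot(n1, cross(n2, n3))

# Hypothetical setup: points in frame 2 map into frame 1 via X1 = R_true X2 + t.
R_true = rot_z(0.1)
t = (0.5, 0.1, 0.0)
points1 = [(1.0, 2.0, 5.0), (-1.0, 1.0, 6.0), (2.0, -1.0, 4.0)]
points2 = [matvec(transpose(R_true), tuple(p - q for p, q in zip(X, t)))
           for X in points1]
bearings1 = [normalize(X) for X in points1]
bearings2 = [normalize(X) for X in points2]

res_true = coplanarity_residual(epipolar_normals(R_true, bearings1, bearings2))
res_wrong = coplanarity_residual(epipolar_normals(rot_z(0.2), bearings1, bearings2))
print(f"true rotation:  {res_true:.2e}")
print(f"wrong rotation: {res_wrong:.2e}")
```

The residual vanishes (up to rounding) for the true rotation and not for the perturbed one. With many correspondences, a common choice is to minimize the smallest eigenvalue of the sum of n nᵀ over all normals rather than a single triple product.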


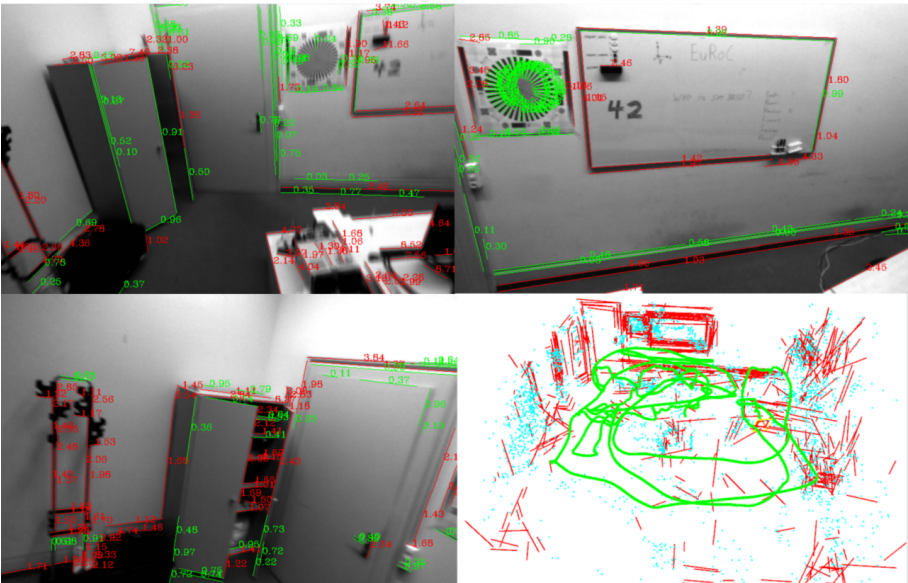

Zewen Xu, Hao Wei, Fulin Tang, Yidi Zhang, Yihong Wu, Gang Ma, Shuzhe Wu, Xin Jin

IROS 2023

Paper

Point and line features are complementary in visual-inertial odometry (VIO) and visual-inertial simultaneous localization and mapping (VI-SLAM) systems. The benefit of combining them depends on weighting each type properly in the cost function, which is usually done according to their uncertainty. Unlike with point features, modeling the uncertainty of line-segment endpoints with an isotropic distribution is unreasonable. However, the uncertainty of a line observation, especially that of its endpoints along the line, is difficult to set because of occlusion and fragmentation. In this work, we use infinite lines as line feature observations and prove that their uncertainty depends only on the endpoint uncertainty perpendicular to the line, so the endpoint uncertainty parallel to the line never has to be set. We also introduce a novel consistent measurement model for line features. Furthermore, to maintain long-term constraints, we add 3D line segments to the state vector and derive how to update them properly. Finally, we build a point-line VIO system that accounts for both the uncertainty of line observations and the consistency of line measurements. The proposed system is validated on two public datasets, where it achieves the best accuracy compared with state-of-the-art point-based VIO systems (OpenVINS, VINS-Mono), a point-line VIO system (PL-VINS), and a structural-line-based system (StructVIO).
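The observation that an infinite-line measurement is insensitive to endpoint errors along the line can be checked with a short sketch. Assuming a 2D image line is represented in homogeneous form l = p1 × p2 and scaled so that a² + b² = 1, the residual of a projected point is its signed perpendicular distance to l. Sliding an endpoint along the line leaves l unchanged, while moving it perpendicular to the line changes the residual. This is a generic illustration, not the measurement model from the paper; the function names and coordinates are hypothetical.

```python
import math

def line_from_endpoints(p1, p2):
    """Homogeneous line through two image points, scaled so a^2 + b^2 = 1."""
    (x1, y1), (x2, y2) = p1, p2
    # Cross product of (x1, y1, 1) and (x2, y2, 1).
    a, b, c = y1 - y2, x2 - x1, x1 * y2 - x2 * y1
    norm = math.hypot(a, b)
    return (a / norm, b / norm, c / norm)

def point_line_residual(line, q):
    """Signed perpendicular distance from point q to the infinite line."""
    a, b, c = line
    return a * q[0] + b * q[1] + c

q = (2.0, 3.0)                    # hypothetical projected point (pixels)
p1, p2 = (0.0, 0.0), (4.0, 0.0)   # hypothetical detected endpoints

r_ref = point_line_residual(line_from_endpoints(p1, p2), q)
# Endpoint slid along the line, e.g. because of occlusion or fragmentation.
r_along = point_line_residual(line_from_endpoints(p1, (10.0, 0.0)), q)
# Endpoint shifted 0.4 px perpendicular to the line.
r_perp = point_line_residual(line_from_endpoints(p1, (4.0, 0.4)), q)

print(r_ref, r_along, r_perp)
```

Because only the perpendicular endpoint offset moves the line, a covariance for this residual needs only the endpoint uncertainty normal to the segment, consistent with the result stated above; how the paper actually propagates that uncertainty is not reproduced here.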


Conference reviewer: IROS 2023-2025, ICRA 2025

Journal reviewer: IEEE RA-L


Last updated: Dec. 2025